Summary

- Researchers at Wiz.io released a post about a nasty vulnerability in the Kubernetes NGINX ingress controller.

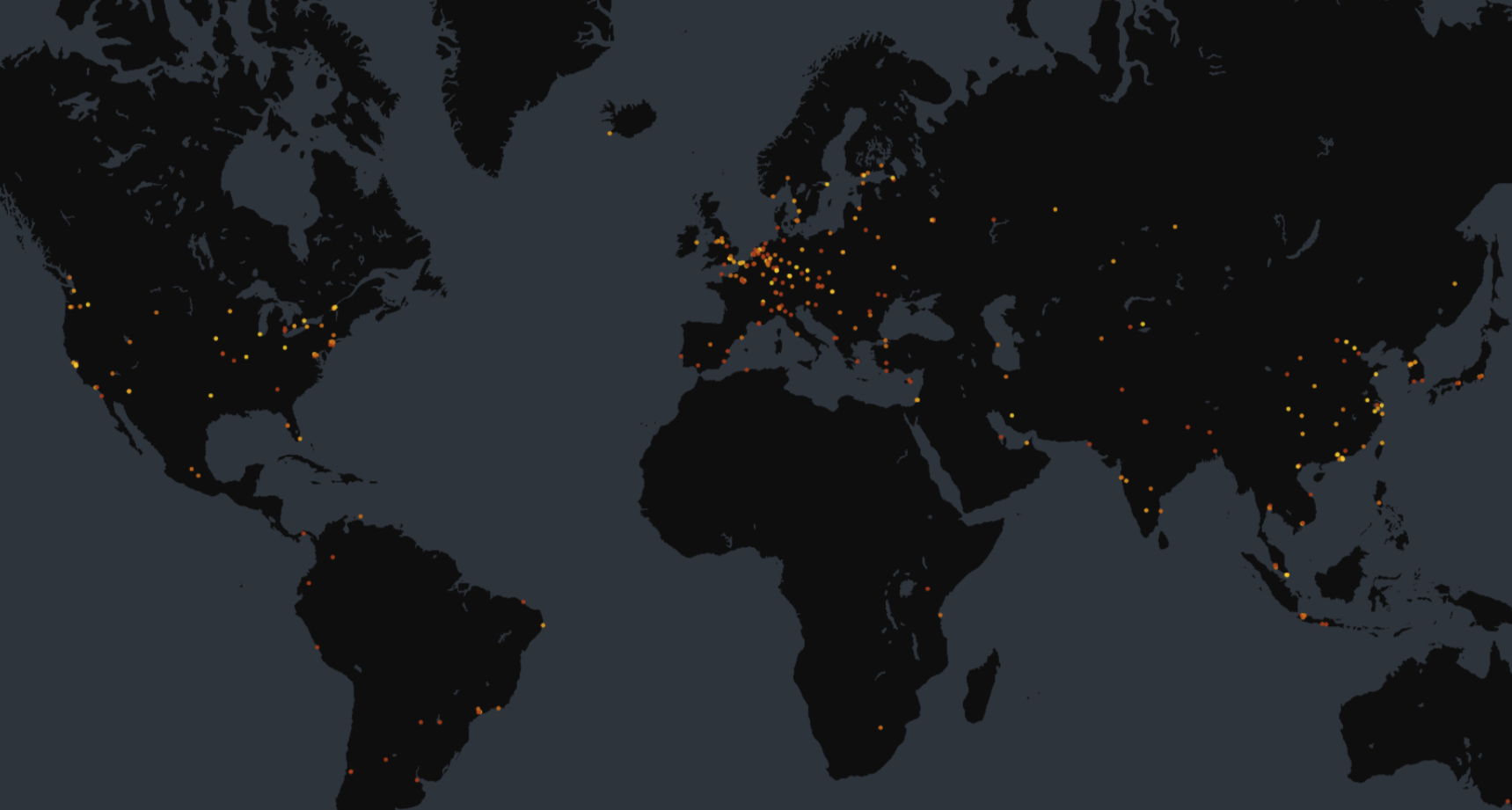

- Around 5,000 hosts were found to be exposing this service directly, potentially leaving them vulnerable to this exploit. (Links: Censys Search, Censys Platform). This does not necessarily mean they are vulnerable, but these ingress controllers should never be exposed to the internet like this, exploitable or not.

Location of exposed NGINX ingress controllers

Details

On March 24, 2025, researchers at Wiz released details of a remote code execution (RCE) vulnerability in Kubernetes, specifically in the Kubernetes NGINX ingress controller. Their blog post covers the vulnerability very well, and I highly suggest reading it. Rather than repeat what has already been stated, I would like to add some context around the whole situation.

A bit of history: one of the first projects I assigned myself here at Censys was to examine the state of Kubernetes security from the perspective of the global internet. This was back in 2021, when there were over 500 unauthenticated Kubernetes API servers, which didn’t sound like much even then. Today, by contrast, only 60 to 70 hosts on the internet have completely unauthenticated API servers.

It is now as it was then: to make Kubernetes insecure like this, the k8s administrator would have to put in much effort (i.e., manually modify init scripts and API command-line arguments). In other words, you don’t accidentally misconfigure Kubernetes API servers. It takes intent.

So, when I was first approached with this research from Wiz, my first thought was, “Naa, there are plenty of controls around the Kubernetes API; this feels bad, yes, but only in a situation where a user has some sort of local access to the underlying K8s network (like a user sitting on a host sitting in a pod).” – “And even if you were to gain access to an API endpoint somehow, there is always RBAC to stop something such as this.”

However, I didn’t see this vulnerability from the proper perspective. I had assumed that, like most Kubernetes components, requests and responses were routed through the Kubernetes API server, meaning that authorization and authentication were always implied. I did not consider the possibility of (assumed) trusted components of a cluster being directly exposed to the broader internet and, more importantly, having no utility or use on the public internet. And this is the exact issue we’re looking at today.

I do not wish to repeat what Wiz has already covered about the details of the vulnerability, but I will summarize it as follows:

Kubernetes has many components that make up the entirety of a cluster. It’s a complex system that is hard to describe in a one-paragraph summary. But one such component is the Ingress Controller, a service type with its own language defining how to create, modify, and shut down reverse proxies into local services. Kubernetes allows completely separate pieces of software to be authoritative over how the traffic is controlled, and one such (popular) example is the NGINX Ingress Controller. And it is here that we find the root of our problems.

Among many other things, the NGINX ingress controller will create a fully functioning NGINX configuration on the fly using data from an `AdmissionReview` request and a configuration template as the base input. After this configuration has been generated, the controller will call out to the system binary “nginx” to test its validity. Wiz, with all their wizardry, had figured out that they could inject certain types of data into the request that would make it into the final NGINX configuration.

This means that if you have the right network access and the ability to submit one of these admission review requests, you could get the controller to generate a “malicious” NGINX configuration.
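To make the injection idea concrete, here is a minimal sketch in Python. It is not the ingress-nginx template or code: `TOY_TEMPLATE`, `render_config`, `render_config_checked`, and the `upstream_name` field are hypothetical stand-ins, and the payload is a harmless made-up header. It only shows how a value copied verbatim from a request into a configuration template can end the intended directive early and add a directive of its own.

```python
from string import Template

# Hypothetical, heavily simplified stand-in for a configuration template.
# "$$" is an escaped literal "$"; "$upstream_name" is the substituted field.
TOY_TEMPLATE = Template(
    "server {\n"
    "    location / {\n"
    "        set $$proxy_upstream_name \"$upstream_name\";\n"
    "    }\n"
    "}\n"
)


def render_config(upstream_name: str) -> str:
    """Render the toy template with no sanitization (the flaw being illustrated)."""
    return TOY_TEMPLATE.substitute(upstream_name=upstream_name)


def render_config_checked(upstream_name: str) -> str:
    """Same rendering, but reject characters that could end the directive."""
    if any(ch in upstream_name for ch in '";{}\n\r'):
        raise ValueError("unsafe characters in upstream name")
    return render_config(upstream_name)


benign = render_config("default-web-80")

# The quote and semicolon close the `set` directive early, so the text that
# follows is parsed as a separate directive. The trailing "#" comments out
# the leftover quote and semicolon from the template.
injected = render_config('x"; add_header X-Injected yes; #')
print(injected)
```

The checked variant is one possible mitigation for this toy case only; it says nothing about how the real controller was fixed.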

In an everyday, sane world, these requests would be done over the API server where all the proper rules apply, but in the upside-down, topsy-turvy world of the internet, where some madman sets up a port-forward to the ingress nginx controller bound to `0.0.0.0`:

    ~ $ kubectl port-forward -n ingress-nginx ingress-nginx-controller-56d7c84fd4-kktns 8443:8443 --address 0.0.0.0

…You can bypass Kubernetes’ secure structure altogether because when something as simple as this is done, no security at the API level will ever help. Your core ingress controller is directly accessible to the internet. For example, I set up a Kubernetes cluster at `192.168.1.152`, installed an NGINX ingress controller, ran the command above to expose it, and logged into another box I had at `192.168.1.69`.

From `192.168.1.69`, I constructed the smallest `AdmissionReview` object I could to test whether the admission server would even respond, and saved it to a local file named `h4x.json`:

    {
      "kind": "AdmissionReview",
      "apiVersion": "admission.k8s.io/v1",
      "request": {
        "kind": {
          "group": "networking.k8s.io",
          "version": "v1",
          "kind": "Ingress"
        },
        "object": {
          "kind": "Ingress"
        },
        "dryRun": true,
        "options": {
          "kind": "CreateOptions",
          "apiVersion": "meta.k8s.io/v1"
        }
      }
    }

This results in the smallest configuration the Ingress controller can generate from its template. Instead of applying the configuration once it is marked as valid, the controller only reports the validation result and goes no further.
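If you would rather generate the request file than type it by hand, the following Python sketch builds the same minimal `AdmissionReview` object and writes it to `h4x.json`. The function names `minimal_admission_review` and `write_review` are my own for illustration; the field values are copied from the object shown above.

```python
import json


def minimal_admission_review() -> dict:
    """Return the minimal dry-run AdmissionReview object used in this post."""
    return {
        "kind": "AdmissionReview",
        "apiVersion": "admission.k8s.io/v1",
        "request": {
            "kind": {
                "group": "networking.k8s.io",
                "version": "v1",
                "kind": "Ingress",
            },
            "object": {"kind": "Ingress"},
            "dryRun": True,
            "options": {
                "kind": "CreateOptions",
                "apiVersion": "meta.k8s.io/v1",
            },
        },
    }


def write_review(path: str = "h4x.json") -> None:
    """Serialize the review to disk so it can be sent with curl -d @file."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(minimal_admission_review(), fh, indent=2)


if __name__ == "__main__":
    write_review()
```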

Here, I use curl to communicate directly with the exposed ingress controller from `192.168.1.69`:

    ~ $ curl -k https://192.168.1.152:8443/validate -H "Content-Type: application/json" -d @h4x.json

    {
      "kind": "AdmissionReview",
      "apiVersion": "admission.k8s.io/v1",
      "request": {
        "uid": "",
        "kind": {
          "group": "networking.k8s.io",
          "version": "v1",
          "kind": "Ingress"
        },
        "resource": {
          "group": "",
          "version": "",
          "resource": ""
        },
        "operation": "",
        "userInfo": {},
        "object": {
          "kind": "Ingress"
        },
        "oldObject": null,
        "dryRun": true,
        "options": {
          "kind": "CreateOptions",
          "apiVersion": "meta.k8s.io/v1"
        }
      },
      "response": {
        "uid": "",
        "allowed": true
      }
    }

The key part of this response is the… well, “response” section: `"allowed": true`. This tells me that the remote end successfully ingested my request, generated a configuration, and validated it.
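For checking your own hosts in bulk, the same request and check can be scripted. The sketch below is a rough Python equivalent of the `curl -k` call, using only the standard library; `post_review` and `is_allowed` are hypothetical helper names, and disabling certificate verification mirrors `-k` and is only appropriate against a host you control.

```python
import json
import ssl
import urllib.request


def is_allowed(raw_response: str) -> bool:
    """Return True if the AdmissionReview response contains "allowed": true."""
    review = json.loads(raw_response)
    # "response" may be missing or null, so fall back to an empty dict.
    return (review.get("response") or {}).get("allowed") is True


def post_review(url: str, review: dict, timeout: float = 5.0) -> str:
    """POST an AdmissionReview as JSON and return the raw response body."""
    # Equivalent of curl -k: skip hostname and certificate verification.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    req = urllib.request.Request(
        url,
        data=json.dumps(review).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout, context=ctx) as resp:
        return resp.read().decode("utf-8")


# Example (not executed here): load the review from h4x.json and send it.
# with open("h4x.json", encoding="utf-8") as fh:
#     body = post_review("https://192.168.1.152:8443/validate", json.load(fh))
# print(is_allowed(body))
```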

If we look at this host with a tool like `openssl`, we can quickly see some distinctive characteristics of the certificate it returns:

    ~ $ openssl s_client -connect 192.168.1.152:8443
    Connecting to 192.168.1.152
    CONNECTED(00000003)
    Can't use SSL_get_servername
    depth=0 O=nil2
    verify error:num=20:unable to get local issuer certificate
    verify return:1
    depth=0 O=nil2
    verify error:num=21:unable to verify the first certificate
    verify return:1
    depth=0 O=nil2
    verify return:1
    ---
    Certificate chain
     0 s:O=nil2
       i:O=nil1
       a:PKEY: id-ecPublicKey, 256 (bit); sigalg: ecdsa-with-SHA256
       v:NotBefore: Mar 25 15:00:25 2025 GMT; NotAfter: Mar 1 15:00:25 2125 GMT

This certificate can easily be found using the following Censys Search query:

    services: (
      tls.certificate.parsed.issuer.organization=`nil1`
      and tls.certificate.parsed.subject.organization=`nil2`
      and tls.certificate.names: nginx
    )

Or in Censys Platform (CenQL) terms:

    host.services:(
      cert.parsed.issuer.organization="nil1"
      and cert.parsed.subject.organization="nil2"
      and cert.names:"nginx"
    )
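If you already have certificate metadata from your own inventory, the same three conditions are easy to apply locally. The Python sketch below is only an illustration: `matches_fingerprint` and its argument shapes are hypothetical, the sample names are made up, and treating the name condition as a case-insensitive substring match is my assumption about how the queries above behave.

```python
from typing import Iterable


def matches_fingerprint(
    issuer_orgs: Iterable[str],
    subject_orgs: Iterable[str],
    names: Iterable[str],
) -> bool:
    """Return True if a certificate has issuer O=nil1, subject O=nil2,
    and at least one name containing "nginx"."""
    return (
        "nil1" in set(issuer_orgs)
        and "nil2" in set(subject_orgs)
        # Assumption: the name condition is a substring match.
        and any("nginx" in name.lower() for name in names)
    )


# Made-up sample values for illustration only.
print(matches_fingerprint(["nil1"], ["nil2"], ["example-nginx-admission"]))
print(matches_fingerprint(["Example CA"], ["nil2"], ["example-nginx-admission"]))
```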

At the time of writing, just under 5,000 hosts (including virtual hosts) exhibit these characteristics. Whether these servers are actually vulnerable is outside the purview of a non-invasive internet scanner like Censys. If you wish to test whether your own servers are affected, you can walk through the steps above without exploiting anything.

Why or how these misconfigured hosts came to be on the internet is a complete mystery. As with many other misconfigurations on the internet, Hanlon’s Razor probably applies: “Never attribute to malice what can be attributed to incompetence.” The attack surface for this vulnerability could have been much smaller; these misconfigured services expand it to anyone on the internet.

The Kubernetes maintainers have released an excellent post about this issue, which also goes into upgrading the components, which I will quote here:

“Today, we have released ingress-nginx v1.12.1 and v1.11.5, which have fixes for all five of these vulnerabilities”
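As a quick way to triage an inventory of controller versions against that statement, here is a small Python sketch. The helper names `parse_version` and `has_fix` are my own. It assumes that releases newer than v1.12.1 keep the fixes and that release lines older than 1.11 never received them, neither of which the quote above states, so treat the result as a hint and check the maintainers' post.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse strings like "v1.11.5" or "1.12.0-beta.0" into (major, minor, patch)."""
    core = version.strip().lstrip("v").split("-", 1)[0]  # drop prefix and pre-release tag
    major, minor, patch = core.split(".")[:3]
    return int(major), int(minor), int(patch)


def has_fix(version: str) -> bool:
    """Return True if the version is at or after a release quoted as fixed."""
    v = parse_version(version)
    if v[:2] == (1, 11):
        # The 1.11 line was fixed in v1.11.5.
        return v >= (1, 11, 5)
    # Assumption: anything at or after v1.12.1 carries the fixes, and
    # anything older than the 1.11 line does not.
    return v >= (1, 12, 1)


for candidate in ("v1.11.4", "v1.11.5", "v1.12.0", "v1.12.1"):
    print(candidate, has_fix(candidate))
```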